Multi-armed bandit optimization makes testing faster and smarter with machine learning

Alex Vieler-Porter

Test faster, smarter, and more efficiently. Multi-armed bandit (MAB) optimization uses machine learning to make that possible. With multi-armed bandit experiments, optimizing your user experience takes fewer resources while increasing the number of conversions. The method also enables automated content selection, which saves even more resources.

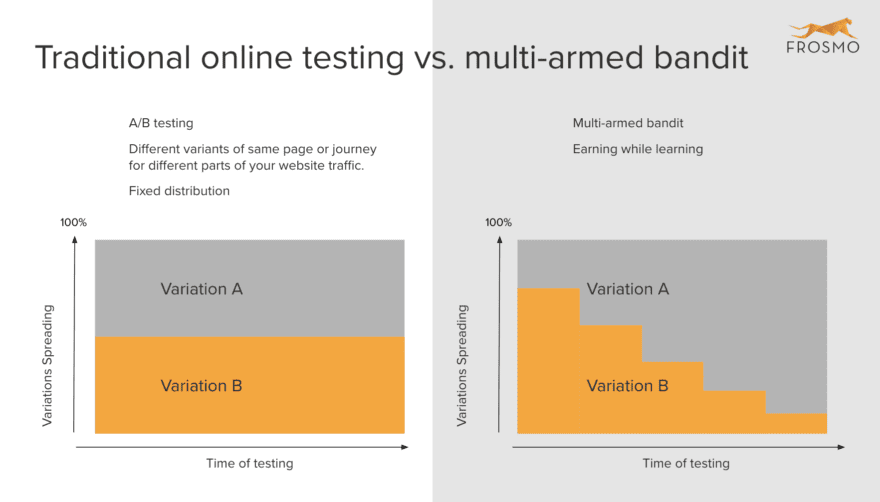

Unlike A/B tests, which send a fixed share of traffic to each variation regardless of how well or how poorly it converts, multi-armed bandit experiments use machine learning to direct more traffic toward the variations with higher conversion rates.

In a multi-armed bandit test, the conversion rates of the variations are checked at regular intervals, and the share of traffic sent to each one is adjusted accordingly. So even if a sub-optimal variation gets a head start early in the test, the next check will shift traffic away from it once its lower conversion rate shows up in the data.
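The article doesn't say which bandit algorithm Frosmo uses. As an illustration only, here is a minimal sketch of the periodic reallocation step, assuming Thompson sampling (one common bandit strategy) with a Beta posterior per variation. The function name `allocation_shares` and the `stats` format are hypothetical. At each check, you pass in the cumulative conversions and visitors, and it returns the share of the next interval's traffic each variation should get, instead of the fixed 50/50 split of an A/B test.

```python
import random


def allocation_shares(stats, draws=10_000, rng=None):
    """Estimate each variation's share of next-interval traffic.

    Hypothetical helper, assuming Thompson sampling. `stats` maps a
    variation name to (conversions, visitors). Each variation's conversion
    rate gets a Beta(1 + conversions, 1 + non-conversions) posterior
    (uniform prior). A variation's share is the fraction of posterior draws
    in which it has the highest sampled conversion rate.
    """
    rng = rng or random.Random()
    wins = {name: 0 for name in stats}
    for _ in range(draws):
        # Sample a plausible conversion rate for every variation.
        sampled = {
            name: rng.betavariate(1 + conv, 1 + visits - conv)
            for name, (conv, visits) in stats.items()
        }
        # Credit the variation that looks best in this draw.
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


if __name__ == "__main__":
    # After one interval: B converts at 4.5% and A at 3.0%.
    shares = allocation_shares({"A": (30, 1000), "B": (45, 1000)}, rng=random.Random(0))
    print(shares)  # B receives most of the next interval's traffic
```

Because the shares are recomputed from all the data collected so far, a variation that got lucky early loses traffic as soon as later visitors pull its estimated rate back down.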

Get results sooner and reduce lost conversions

Because a multi-armed bandit test typically delivers actionable results sooner than an A/B test, the method is particularly well suited to campaigns where you need answers quickly.

If you’re running a week-long contest, for example, you need to know which offer is most appealing before the contest is over, and an A/B test is unlikely to reach a conclusion in time. Not only does the multi-armed bandit give you the right answer sooner, but because the better-converting variation is shown to more users, you won’t miss out on conversions from the get-go. You’ll be earning while learning.

Interestingly, the multi-armed bandit method also lends itself well to the opposite scenario. In cases where an A/B test isn’t expected to reach statistical significance for months or even years, the benefits of using a multi-armed bandit instead will be even greater.

This is likely to happen when you’re testing so many variations that it takes a long time to show each one to a large enough number of users. Multi-armed bandit experiments remedy this by eliminating low performers early in the race and allocating their traffic to potential winners. The result is fewer lost conversions and far less wasted time.
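The article doesn't specify how low performers are eliminated. One standard technique is successive elimination, and the sketch below is a simplified version of it, assuming Hoeffding confidence bounds. The function `surviving_variations` and its `delta` parameter (an error budget split evenly across variations) are hypothetical. A variation is dropped once even its optimistic estimate falls below the pessimistic estimate of the current leader.

```python
import math


def surviving_variations(stats, delta=0.05):
    """Return the variations that are still plausible winners.

    Hypothetical successive-elimination sketch. `stats` maps a variation
    name to (conversions, visitors); every variation needs at least one
    visitor. Each variation gets a Hoeffding confidence interval around its
    observed conversion rate, with `delta` split evenly across variations
    (a union bound). Simplification: the bound does not account for
    checking repeatedly over time.
    """
    k = len(stats)
    bounds = {}
    for name, (conv, visits) in stats.items():
        rate = conv / visits
        radius = math.sqrt(math.log(2 * k / delta) / (2 * visits))
        bounds[name] = (rate - radius, rate + radius)
    # The leader's lower bound is the bar every variation must clear.
    best_lower = max(low for low, _ in bounds.values())
    return sorted(name for name, (_, high) in bounds.items() if high >= best_lower)


if __name__ == "__main__":
    # Ten variations with 20,000 visitors each: V0 at 5%, V1 at 4.5%, the rest at 1%.
    stats = {f"V{i}": (200, 20_000) for i in range(10)}
    stats["V0"] = (1_000, 20_000)
    stats["V1"] = (900, 20_000)
    print(surviving_variations(stats))
```

In this example the eight 1% variations are eliminated and their traffic can go to V0 and V1, which are still too close to separate.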

The final example is an experiment that you let run indefinitely. If you have a large product catalog, for instance, it makes sense to promote a changing selection of products on the main page. With a multi-armed bandit test, low-performing products are automatically swapped out for new variations. Because you effectively create a high-performing, continuously updated selection based on trends in buying behavior, you’ll see more conversions without doing anything beyond the initial setup.

This is machine learning in action.

Continuous improvement through faster and more frequent testing

In most cases, multi-armed bandit experiments are the way to go for businesses that want results faster. The ability to run more complex tests, and to test more often, can lead to bigger returns. The process is automated and opens the door to continuous improvement, which makes the method a no-brainer. The three examples above are just some of the ways multi-armed bandit experiments can be used in web development.


About the writer:

Alex Vieler-Porter has been working at Frosmo for nearly 6 years and opened the UK office in London in April 2016. He’s now focusing on helping companies define and execute their AI strategies and create unique user experiences. Aside from artificial intelligence, he’s passionate about IoT and the possibilities for new business models.